The Real Spectral Theorem

Let’s take the last couple lemmas we’ve proven and throw them together to prove the real analogue of the complex spectral theorem. We start with a self-adjoint transformation $S:V\rightarrow V$ on a finite-dimensional real inner-product space $V$.

First off, since $S$ is self-adjoint, we know that it has an eigenvector $e_1$, which we can pick to have unit length (how?). The subspace $\mathbb{R}e_1$ is then invariant under the action of $S$. But then the orthogonal complement $V_1=\left(\mathbb{R}e_1\right)^\perp$ is also invariant under $S$. So we can restrict $S$ to a transformation $S_1=S|_{V_1}:V_1\rightarrow V_1$.
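The invariance of the orthogonal complement is a one-line computation, and the same computation shows why the restriction is again self-adjoint. Write $e_1$ for the unit eigenvector, $\lambda_1$ for its eigenvalue (a label introduced only for this check), $V_1$ for the orthogonal complement of $e_1$, and $S_1$ for the restriction of $S$ to $V_1$. For $v,w\in V_1$:

```latex
\langle Sv, e_1\rangle = \langle v, Se_1\rangle = \lambda_1\langle v, e_1\rangle = 0
  \quad\Longrightarrow\quad Sv \in V_1,
\qquad
\langle S_1 v, w\rangle = \langle Sv, w\rangle = \langle v, Sw\rangle = \langle v, S_1 w\rangle .
```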

It’s not too hard to see that $S_1$ is also self-adjoint, and so it must have a unit eigenvector $e_2\in V_1$, which will also be an eigenvector of $S$. And we’ll get an orthogonal complement $V_2$ of $\mathbb{R}e_2$ inside $V_1$, and so on. Since every step we take reduces the dimension of the vector space we’re looking at by one, we must eventually bottom out. At that point, we have an orthonormal basis of eigenvectors for our original space $V$. Each eigenvector was picked to have unit length, and each one is in the orthogonal complement of those that came before, so they’re all orthogonal to each other.
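Here is a minimal numerical sketch of that inductive construction, assuming NumPy and representing $S$ by a symmetric matrix with respect to an orthonormal basis. The function name `spectral_basis` is ours, and the single call to `np.linalg.eigh` on the restricted matrix is only a stand-in for the lemma that a self-adjoint transformation has an eigenvector; the rest follows the argument step by step (restrict, pick one unit eigenvector, pass to its orthogonal complement, repeat).

```python
import numpy as np


def spectral_basis(S, tol=1e-10):
    """Orthonormal eigenbasis of a real symmetric matrix S, built by deflation:
    take one unit eigenvector, pass to its orthogonal complement, restrict S
    there, and repeat until the dimension reaches zero."""
    S = np.asarray(S, dtype=float)
    if not np.allclose(S, S.T, atol=tol):
        raise ValueError("S must be symmetric (self-adjoint)")
    n = S.shape[0]
    Q = np.eye(n)                 # columns: orthonormal basis of the current subspace
    eigvals, eigvecs = [], []
    while Q.shape[1] > 0:
        # Matrix of the restriction of S to span(Q), in the basis Q.
        # It is symmetric, i.e. the restriction is again self-adjoint.
        A = Q.T @ S @ Q
        # Stand-in for the existence lemma: take ONE unit eigenvector of A.
        w, Y = np.linalg.eigh(A)
        y = Y[:, 0]
        eigvals.append(w[0])
        eigvecs.append(Q @ y)     # the same vector in the coordinates of V
        # Orthonormal basis of y's orthogonal complement inside R^k:
        # the remaining right-singular vectors of the 1 x k matrix y^T.
        _, _, Vt = np.linalg.svd(y.reshape(1, -1))
        Q = Q @ Vt[1:].T          # the dimension drops by one
    return np.array(eigvals), np.column_stack(eigvecs)


S = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 4.0]])
lams, E = spectral_basis(S)
print(np.allclose(E.T @ E, np.eye(3)))        # True: orthonormal columns
print(np.allclose(S @ E, E @ np.diag(lams)))  # True: every column is an eigenvector
```

Each new vector lies in the span of the current `Q`, which is orthogonal to every vector chosen earlier, so orthonormality comes for free, just as in the argument.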

Just like in the complex case, if we have a basis and a matrix already floating around for $S$, we can use this new basis to perform a change of basis, which will be orthogonal (not unitary in this case). That is, we can write the matrix of any self-adjoint transformation $S$ as $S=ODO^{-1}$, where $O$ is an orthogonal matrix and $D$ is diagonal. Alternately, since $O^{-1}=O^*$, we can think of this as $S=ODO^*$, in case we’re considering our transformation as representing a bilinear form (which self-adjoint transformations often are).
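For a concrete check, NumPy’s `np.linalg.eigh` (which assumes a symmetric input and returns ascending eigenvalues together with orthonormal eigenvector columns) hands back exactly the factors $O$ and $D$. The matrix and test vectors below are arbitrary examples; for a real matrix, $O^*$ is just the transpose `O.T`.

```python
import numpy as np

S = np.array([[4.0, 1.0, 2.0],
              [1.0, 3.0, 0.0],
              [2.0, 0.0, 5.0]])   # arbitrary symmetric matrix

eigenvalues, O = np.linalg.eigh(S)   # columns of O: orthonormal eigenvectors
D = np.diag(eigenvalues)

# O is orthogonal, so its inverse is its transpose (the real adjoint).
print(np.allclose(O.T @ O, np.eye(3)))        # True
# The change of basis diagonalizes S: S = O D O^{-1} = O D O^T.
print(np.allclose(O @ D @ O.T, S))            # True
# Read as a bilinear form: B(x, y) = x^T S y = (O^T x)^T D (O^T y).
x, y = np.array([1.0, -2.0, 0.5]), np.array([0.0, 1.0, 3.0])
print(np.isclose(x @ S @ y, (O.T @ x) @ D @ (O.T @ y)))  # True
```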

What if we’ve got this sort of representation? A transformation with a matrix of the form $ODO^*$ must be self-adjoint. Indeed, we can take its adjoint to find

$$\left(ODO^*\right)^*=\left(O^*\right)^*D^*O^*=OD^*O^*$$

but since $D$ is diagonal, it’s automatically symmetric, so $D^*=D$ and the adjoint is $ODO^*$ again; the matrix represents a self-adjoint transformation. Thus if a real transformation has an orthonormal basis of eigenvectors, it must be self-adjoint.
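Going the other way is just as easy to check numerically. In this minimal NumPy sketch (the seed, the size, and the diagonal entries are arbitrary choices), a random orthogonal $O$ comes from the Q factor of `np.linalg.qr`, and the product $ODO^*$ comes out symmetric, with the columns of $O$ as its eigenvectors.

```python
import numpy as np

rng = np.random.default_rng(0)
# A random orthogonal matrix: the Q factor of a QR decomposition.
O, _ = np.linalg.qr(rng.standard_normal((4, 4)))
D = np.diag([3.0, -1.0, 0.5, 2.0])     # any real diagonal matrix

S = O @ D @ O.T
print(np.allclose(S, S.T))             # True: O D O^T is symmetric
# The columns of O are the orthonormal eigenvectors we started from.
print(np.allclose(S @ O, O @ D))       # True
```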

Notice that this is a somewhat simpler characterization than in the complex case. This hinges on the fact that for real transformations taking the adjoint corresponds to simple matrix transposition, and every diagonal matrix is automatically symmetric. For complex transformations, taking the adjoint corresponds to conjugate transposition, and not all diagonal matrices are Hermitian. That’s why we had to expand to the broader class of normal transformations.
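The failure in the complex case is easy to exhibit too. The sketch below, again assuming NumPy with an arbitrary seed and an arbitrary diagonal, conjugates a diagonal matrix with non-real entries by a random unitary $U$: the result is normal but not self-adjoint, since its adjoint $UD^*U^*$ involves the conjugate diagonal $D^*\neq D$.

```python
import numpy as np

rng = np.random.default_rng(1)
Z = rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3))
U, _ = np.linalg.qr(Z)                  # a random unitary matrix
D = np.diag([1.0 + 2.0j, -1.0, 3.0j])   # diagonal, but not equal to its conjugate

T = U @ D @ U.conj().T
print(np.allclose(T, T.conj().T))                   # False: not self-adjoint
print(np.allclose(T @ T.conj().T, T.conj().T @ T))  # True: normal
```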

Are you on your way to the Jones polynomial via von Neumann algebras? Are we then 12-24 months out from the HOMFLY polynomial?

I will, eventually, get to a fair bit of knot theory. I’ve got plenty of background to do some quantum topological approaches, but I still can’t even define a knot (as opposed to a knot diagram). I need more topology and differential geometry for that.

And I need multivariable calculus to do differential geometry.

And I need linear algebra to do multivariable calculus.

😀

I really can’t relate to this kind of math topic, hehe. I am so weak in mathematics, haha.

First, let me say that I think these notes on mathematics are on the whole really well done, and that they will provide a valuable online resource for students of mathematics for years to come.

Don’t take this as criticism, but there is one background fact which underlies this exposition of spectral theory, and which still remains to be proven (speaking intra this blog): the fundamental theorem of algebra. Most authors are content to just assume this and get on with business. But there’s another approach available to teachers of linear algebra, which can easily be geometrically motivated for students who have had some multivariable calculus and in particular the method of Lagrange multipliers, and which circumvents the FTA.

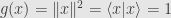

The idea is that if $S$ is a self-adjoint transformation, then level sets of the real-valued quadratic form $q(x)=\langle Sx,x\rangle$ are described by “ellipsoids” whose principal axes are in the directions of eigenvectors which can be used in a diagonalization of $S$ (not literally ellipsoids of course except in the case of definite forms, but that’s alright). The eigenvalues (i.e. the diagonal entries in the diagonalization) are related to lengths of the principal radii; alternatively, they correspond to critical values of Lagrange multipliers used to solve an optimization problem.
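To make the “principal radii” remark concrete, suppose $e_1,\dots,e_n$ is an orthonormal basis of eigenvectors of a self-adjoint $S$ with $Se_i=\lambda_ie_i$ (notation introduced here), let $q(x)=\langle Sx,x\rangle$, and expand $x=\sum_i y_ie_i$:

```latex
q(x) = \langle Sx, x\rangle
     = \Big\langle \sum_i \lambda_i y_i e_i,\ \sum_j y_j e_j \Big\rangle
     = \sum_i \lambda_i y_i^2 .
```

When every $\lambda_i>0$ the level set $q(x)=1$ is the ellipsoid $\sum_i y_i^2\big/\big(1/\sqrt{\lambda_i}\big)^2=1$, with principal axes along the $e_i$ and principal radii $1/\sqrt{\lambda_i}$. On the unit sphere $\sum_i y_i^2=1$, $q(x)$ is a weighted average of the $\lambda_i$, so its maximum and minimum there are the largest and smallest eigenvalues.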

Thus, consider the problem of maximizing the function $q(x)=\langle Sx,x\rangle$ subject to the constraint $\langle x,x\rangle=1$. It’s clear from the extreme value theorem that such a maximum is attained. At such a critical point $x$, we will have

$$\nabla q(x)=\lambda\,\nabla\langle x,x\rangle$$

for some critical value $\lambda$; a short calculation of the gradients (using $S^*=S$) yields

$$2Sx=2\lambda x,$$

whence $x$ is an eigenvector of $S$, with corresponding eigenvalue $\lambda$. Notice this approach shows that an eigenvector exists! Then, the same argument can be applied to the restriction of $S$ to the orthogonal complement of $x$, and so on. In this way, appeal to the FTA is averted, and there is also some nice geometric insight in this approach.
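A numerical counterpart of this argument can be sketched with NumPy, with one substitution made plainly: instead of solving the Lagrange equations, the hypothetical helper `maximize_rayleigh` below climbs to the maximum of $q$ on the unit sphere by power iteration on the shifted matrix $S+cI$ (a standard technique, not the commenter’s method). The shift `c` is a Gershgorin bound plus one, which makes $S+cI$ positive definite, so the iteration heads for an eigenvector of the largest eigenvalue, which is the constrained maximizer. The sketch then checks the Lagrange condition $Sx=\lambda x$ with $\lambda=q(x)$.

```python
import numpy as np


def maximize_rayleigh(S, iters=10_000, tol=1e-12, seed=0):
    """Approximate the maximizer of q(x) = <Sx, x> on the unit sphere
    by power iteration on S + c I (assumes S is real symmetric)."""
    S = np.asarray(S, dtype=float)
    n = S.shape[0]
    # Gershgorin: every eigenvalue satisfies |lambda| <= max row sum,
    # so adding 1 more makes S + c I positive definite.
    c = np.max(np.sum(np.abs(S), axis=1)) + 1.0
    M = S + c * np.eye(n)
    x = np.random.default_rng(seed).standard_normal(n)
    x /= np.linalg.norm(x)                 # start on the unit sphere
    for _ in range(iters):
        x_new = M @ x
        x_new /= np.linalg.norm(x_new)     # stay on the constraint set
        converged = np.linalg.norm(x_new - x) < tol
        x = x_new
        if converged:
            break
    lam = x @ S @ x                        # the critical value q(x)
    return lam, x


S = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 4.0]])
lam, x = maximize_rayleigh(S)
print(np.allclose(S @ x, lam * x, atol=1e-8))        # True: S x = lambda x
print(np.isclose(lam, np.linalg.eigvalsh(S).max()))  # True: the largest eigenvalue
```

Running the same routine on the matrix of the restriction to the orthogonal complement of `x` gives the next eigenvector, mirroring the “and so on” step.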

One can easily extend the result to normal operators $T$: apply the spectral theorem (whose proof is indicated in this comment) to the real and imaginary parts $A=\frac{T+T^*}{2}$, $B=\frac{T-T^*}{2i}$, which are self-adjoint. These parts commute exactly when $T$ is normal, and one proves the fact that commuting self-adjoint operators are simultaneously diagonalizable.
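A small NumPy check of this decomposition, under stated assumptions: the normal operator $T$ is built from a random unitary and a diagonal chosen so that the real parts of its eigenvalues are distinct. In that easy case the eigenvectors of $A$ already diagonalize the commuting $B$; with repeated eigenvalues of $A$ one would also have to diagonalize $B$ on each eigenspace of $A$, which this sketch does not do.

```python
import numpy as np

rng = np.random.default_rng(2)
Z = rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3))
U, _ = np.linalg.qr(Z)                                     # random unitary matrix
T = U @ np.diag([1 + 2j, -2 + 0.5j, 3 - 1j]) @ U.conj().T  # a normal operator

Tstar = T.conj().T
A = (T + Tstar) / 2        # "real part", self-adjoint
B = (T - Tstar) / 2j       # "imaginary part", self-adjoint

print(np.allclose(A, A.conj().T), np.allclose(B, B.conj().T))  # True True
print(np.allclose(A @ B, B @ A))                                # True, since T is normal
print(np.allclose(T, A + 1j * B))                               # True

# A has distinct eigenvalues (1, -2, 3) here, so its eigenvectors
# also diagonalize the commuting operator B.
_, V = np.linalg.eigh(A)
B_in_V = V.conj().T @ B @ V
print(np.allclose(B_in_V, np.diag(np.diag(B_in_V))))           # True
```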

Again, this isn’t criticism of the present approach; I just happen to like this alternative approach both for the economy and the geometric insight, and I thought some of your readers might like it too.

Oh sure. I don’t even need Lagrange multipliers, really. All I need is the theorem which states that level sets of continuous functions are closed. Then the unit sphere, being closed and bounded, is compact, and thus any continuous real-valued function on it must take a maximum and a minimum.

Again, the problem is that we need some amount of geometry to pursue the geometric viewpoint. Now, I could switch back and forth a lot more between the multivariable calculus and the linear algebra, but I find it less confusing to just plunge straight through all of this right now and then swap back to the analytic side.

The mention of Lagrange multipliers was not idle. One needs more than the extreme value theorem, whose proof you just outlined: one needs the crucial fact that the extremizing point is in fact an eigenvector. “Lagrange multipliers” are a rubric for what is needed here.

I wouldn’t consider any of this a problem, but rather a delightful alternative point of view!

[…] Singular Value Decomposition Now the real and complex spectral theorems give nice decompositions of self-adjoint and normal transformations, […]

Pingback by The Singular Value Decomposition « The Unapologetic Mathematician | August 17, 2009

[…] Here’s a neat thing we can do with the spectral theorems: we can take square roots of positive-semidefinite transformations. And this makes sense, since […]

Pingback by Square Roots « The Unapologetic Mathematician | August 20, 2009

[…] if we work with a self-adjoint transformation on a real inner product space we can pick orthonormal basis of eigenvectors. That is, we can find an orthogonal transformation and a diagonal transformation so that […]

Pingback by Decompositions Past and Future « The Unapologetic Mathematician | August 24, 2009

[…] form. Further, Clairaut’s theorem tells us that it’s a symmetric form. Then the spectral theorem tells us that we can find an orthonormal basis with respect to which the Hessian is actually […]

Pingback by Classifying Critical Points « The Unapologetic Mathematician | November 24, 2009